Practical Exercise: Create background removing application for Zoom/Skype

Introduction

This lesson is an exercise for everyone to apply machine learning to regular applications that we might need. We will create an application that can take the input from the webcam, remove the background from persons (without any green screen), and then transfer it to a virtual device that can be selected by other applications like Zoom or Skype. We will use only Linux operating system for virtual device creation and provide instructions on how to create DirectShow virtual camera devices on windows using OBS-virtual-Camera DLLs.

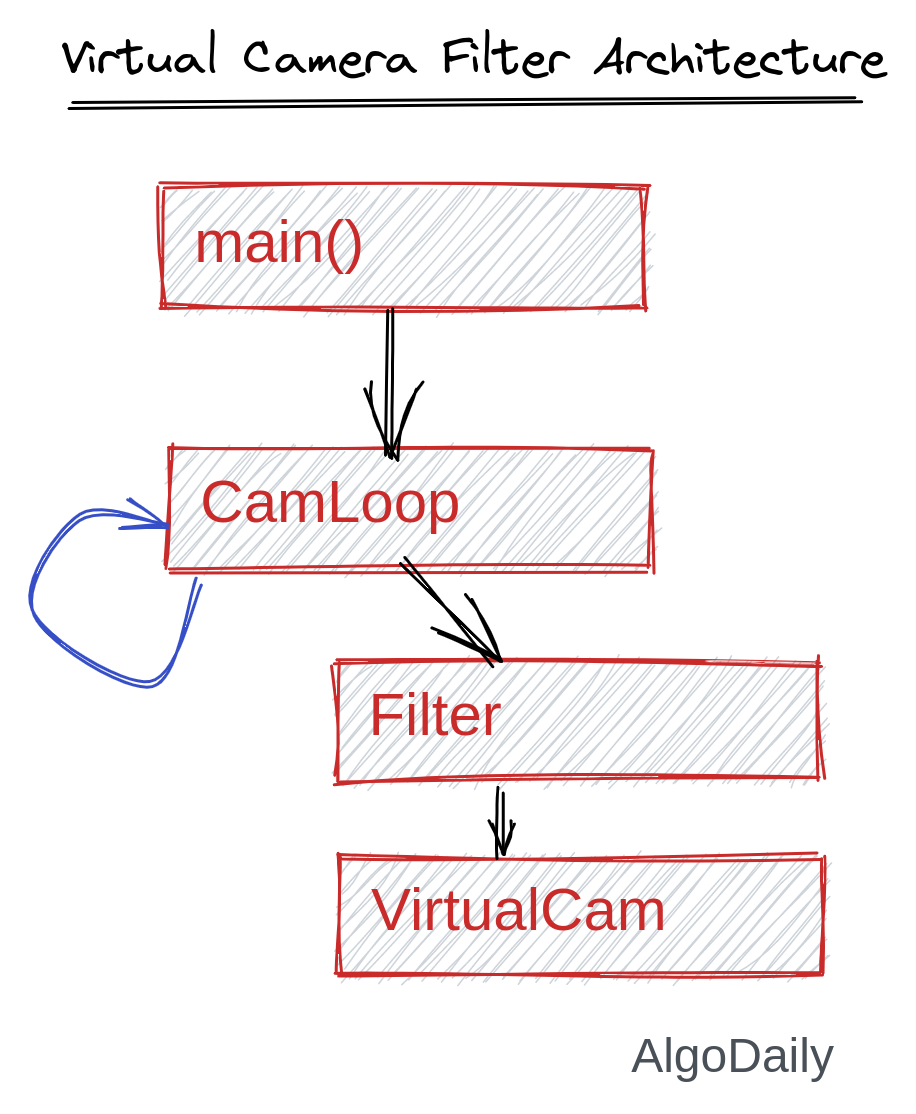

General Architecture

The main architecture of the software is given below:

The complete file structure is provided below so that you understand which file to create where:

| |

Virtual Camera

First, we will create a class names VirtualCam.py which will be responsible for all the virtual camera management. It will have a function named send that we will use to send images to the newly created sink device. First, we need to download a utility script for Linux to manage a /dev/video device. Create a project directory with any name you want. Then download the v4l2.py file from here and place it into the root directory of the project.

Now create another file named VirtualCam.py and write a class template inside that file:

| |

For now, we will create a virtual camera for Linux-based Operating Systems. At the end of the lesson, I will leave some notes if you want to create a virtual camera for Windows. Because configuring the virtual device on Linux is just simpler than the other, I chose this one to implement so we can focus more on the machine learning/image filtering part.

We need to implement all the functions. First, we will initialize a virtual camera and set the correct properties of the video format we will pass to send to the virtual device. This will allow other applications to understand the camera properties and connect to the camera. A loopback device module will be created and registered to the Linux Kernel. We need a program named v4l2loopback-dkms for this. Install the program using this command:

| |

If you are using an Arch Linux-based distro (e.g. Manjaro), you can install the v4l2loopback-dkms package from AUR. There are a lot of instructions to install this package for other Linux distributions. After installing this, we will have a command named modprobe v4l2loopback which will help us to register a new virtual camera device to the kernel.

| |

Note: You will need root privileges to do this. Either you have to always run the python program as root, or you can add pkexec before the command so it will as for admin password when the command runs.

The description of each parameter is given below:

| |

After creating the camera, we set its properties (height, width, format, etc.). And in the __del__ method, we delete the device and remove the v4l2loopback kernel module.

| |

Finally, we implement the convenient send button that will send an image to the device:

| |

Background Remove

A new filter class will be created where we can send an image and it will apply the desired filter on the image and return it. For that, create a file named BackgroundRemove.py and add this template code to it:

| |

We will use the Deeplab v3 segmentation model to segment humans from the background. First, download the saved model graph file from here and extract the tar file. Then rename frozen_inference.pb to saved_model.pb. You can delete the other files in the extracted directory. Keep the extracted directory name to whatever you want, but change the name inside the code I show you below so it can find the saved model file.

We need to load the model file into a tf.Graph() object and use it as a function. Unfortunately, the model has trained on the Pascal dataset a long time ago when there was TensorFlow 1. So we need the tf.compat submodule to load the model using in TensorFlow 1 format and run it in a session (an old way to run a computation graph in TensorFlow 1.x).

So in the __init__ method, we create a tf.Graph(), use it as default, and load the model file into it. Then we create a tf.Session (or tf.compat.v1.Session for TF2) and set the graph as default in it.

| |

The graph we just loaded can do a lot of things besides segmentation. But we will only use the segmentation which is labeled as SemanticPrediction in the graph. We set the index of the output of that label as the first (and only) one. So when we run the session, the output result will return a list with only a single element (the SemanticPrediction). The input to the graph is labeled as ImageTensor. So we set it to in_frame as a list. This is done inside the feed_dict parameter in Session.run() method.

The pixels labeled as a human are labeled as 15 in the segmentation map. We will only keep those pixels of the input image, and turn all other pixels to black. See the code below:

| |

We have all the parts in place. Now we implement the driver program, the main method. In this method, we read the image, apply the background removing the filter and send it to the virtual camera. We can also see the processed picture to know if it’s working using cv2.imshow() function.

| |

Everything is good to go. Now run the program and you will see a window displayed your camera, Background Removed.

Note: If you see the model running very slowly, it is because you do not have a GPU, or you did the install TensorFlow correctly to use GPU. Please go to the Deep Learning with Tensorflow Lesson and try installing TensorFlow the proper way with CUDA enabled.

You can also keep the program running, and start the zoom application. In the settings, you can see your original camera blank and a new device named “vCam”.

To use it on windows, you will need to create a DLL that can register a DirectShow virtual Camera to the device manager. There is a python module for this here named pyvirtual cam. You can determine the operating system using the platform module in python and dynamically set the virtual camera based on which operating system your program is running on. Below is an example of virtual camera that works for both Windows and Linux. For windows, you will need pyvirtualcam and OBS virtual cam DLLs.

| |

Conclusion

I just showed you how you can apply a machine learning model to your application. Instead of Background Removal, you can also apply other filters that are based on Machine Learning. Let us know different ideas you come up with by sharing them in the discussion section.